About

My name is Dmitry Andreev, founder of inciprocal Inc. I fell in love with video games, graphics, and interactive experiences when I was a kid, dreaming that one day it would be possible to have a real-world simulation indistinguishable from reality, where things could be done that are not possible in real life, or where “what if” ideas could quickly and easily be tried out in various fields of art, design, and production. I’ve been involved in the demoscene, which led to my career in the video game industry, working with a lot of great artists, engineers, and designers at studios such as LucasArts and Electronic Arts. For the past 20 years, I worked on various AAA titles before starting inciprocal Inc. and focusing on photo-realistic data acquisition technology.

Games and movies

Having been involved with most aspects of real-time graphics, I found it great to see the video game and movie industries converge. On the one hand, graphics hardware and algorithms became powerful enough to render very realistic scenery in real time. On the other hand, we had decades of experience creating photo-realistic motion pictures, along with production best practices. Games took a lot from the movie and VFX industries, and a few years ago we started to see more and more real-time tech being used for productions across many studios.

The greater degree of quality and realism inevitably led to increased production costs, getting to the point where it was either too expensive to produce or you needed to drop the quality. At some point, it became clear that with a bunch of tricks here and there you could pretty much get the same image in terms of visual fidelity using Frostbite, Unreal Engine, Unity, or Sketchfab when compared to offline physically-based path tracing. But in order to keep pushing for realism and production value, one had to focus on data, how it gets acquired, processed, and rendered.

State of digital asset scanning

When it comes to creating real-world doubles with scanning there are three main ways to do it: Photogrammetry, Structured Light, and Photometry, as well as various AI “enhanced” variations of these. Of course, there are also LiDAR, lasers, light fields, and more.

Photogrammetry reconstructs geometry based on textural features that are visible from multiple points of view. But the technique fails when no distinct features are present, and the software can’t derive any representable material properties other than diffuse albedo, and even that only when proper cross-polarization is used. Additionally, it tends to introduce a lot of noise or detail into the geometry that is not representative of the actual surface that light interacts with; this might require a lot of manual scan cleaning in tools like ZBrush.

Structured light techniques, by contrast, are based on projecting certain patterns and then analyzing how they get distorted by the surface to reconstruct the geometry. This technique can be quite accurate and doesn’t depend as much on textural detail, though it might have problems with alignment and registration in some cases, especially for materials that exhibit substantial sub-surface scattering. When it works, structured light requires relatively little geometry cleaning, but it is not useful for photo-realistic rendering applications out of the box.
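To make the idea of projected patterns more concrete, here is a minimal sketch of one classic textbook scheme, binary Gray-code stripes. It is only an illustration and not a description of the inciprocal pipeline; the function names, the 0.5 threshold, and the assumption of normalized intensities are all hypothetical. Each projector column gets a unique bit sequence, and a camera pixel recovers the column it sees by thresholding its intensity under every pattern.

```python
def gray_encode(n):
    """Convert a binary index to its reflected Gray code."""
    return n ^ (n >> 1)


def gray_decode(g):
    """Convert a reflected Gray code back to the binary index."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def make_patterns(width, num_bits):
    """Build one stripe pattern per bit, most significant bit first.

    Each pattern is a list with a 0 (dark) or 1 (lit) per projector column.
    """
    return [[(gray_encode(col) >> bit) & 1 for col in range(width)]
            for bit in range(num_bits - 1, -1, -1)]


def decode_pixel(observed, threshold=0.5):
    """Recover the projector column seen by one camera pixel.

    observed: the pixel's normalized intensity under each pattern, in the
    same order as make_patterns (hypothetical threshold of 0.5).
    """
    g = 0
    for value in observed:
        g = (g << 1) | (1 if value > threshold else 0)
    return gray_decode(g)
```

Once every camera pixel knows which projector column lit it, depth follows from triangulation between the camera and the projector, which is omitted here. Materials with strong sub-surface scattering blur the stripes, which is one way the thresholding step above can go wrong.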

Photometry has been used extensively in the VFX industry for a long time to produce accurate doubles for humans, human heads, and faces. It is based on observing the same surface under a lot of different lighting conditions and reconstructing underlying material properties. Though it produces great shading accuracy and high-quality surface detail, it does not lead to direct reconstruction of the base geometry mesh by itself and relies on other techniques. Even though it’s possible to optimize for specialized surfaces like that of skin, general-purpose cases such as metals and common dielectrics are challenging.
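As an illustration of the principle, the simplest textbook form of this idea is Lambertian photometric stereo. The sketch below is not the inciprocal method; it assumes a purely diffuse surface, known unit light directions, no shadows or highlights, and hypothetical function names. For one pixel, the observed intensities under several lights are fitted by least squares to recover albedo and surface normal.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a * x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions are degenerate (coplanar)")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(det3(m) / d)
    return x


def photometric_stereo_pixel(light_dirs, intensities):
    """Estimate (albedo, unit normal) for one pixel of a diffuse surface.

    light_dirs: unit vectors pointing toward each light (at least three).
    intensities: observed pixel intensity under each light.
    """
    # Normal equations (L^T L) g = L^T I, where g = albedo * normal.
    ltl = [[sum(l[i] * l[j] for l in light_dirs) for j in range(3)]
           for i in range(3)]
    lti = [sum(l[i] * v for l, v in zip(light_dirs, intensities))
           for i in range(3)]
    g = solve3(ltl, lti)
    albedo = math.sqrt(sum(c * c for c in g))
    if albedo == 0.0:
        raise ValueError("pixel is black under every light")
    return albedo, [c / albedo for c in g]
```

Note that the result is a per-pixel normal and albedo, not a mesh, which matches the point above that photometry relies on other techniques for the base geometry. Metals and glossy dielectrics break the diffuse assumption, which is part of why general-purpose materials are harder.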

Addressing many of the issues related to rapid and accurate asset reproduction for use in photo-realistic applications led us to rethink and redesign the whole scanning solution from the ground up, combining various techniques together in a unified fashion where various stages guide and help one another.

inciprocal scanning process

While advanced hardware and software technologies are important, the best results are achieved when scanning procedures are closely integrated with other parts of the process. One unique requirement of our process that leads to accurate reconstruction of material properties is exposure of the source material to a lot of lighting conditions as well as different view perspectives. In many cases that means using various suspension and turntable rigs with custom 3D printed stands and holders.

Various perspectives that have hundreds of other shots associated with them (Diffuse + Specular shown)

Each position and orientation of an object would have a lot of different camera views under different lighting conditions, along with many more projected light patterns associated with them. It’s a trade-off made to get geometric data that is as clean as possible and to minimize manual cleaning afterward. Depending on surface properties, special projection patterns and software techniques get involved in order to tap into the interior of an object, to see how light propagates inside of it. In some cases, HDR photography is required when the range of surface intensities is too wide. In other cases, it is required to place some markers to guide registration, spray things partially, or go through air conditioning cycles in order to have the prop covered in moisture to make surface interfaces visible to lights and cameras.

Various lighting conditions are photographed to accurately estimate shading properties of the surface

Depending on material and geometry complexity, the acquisition process might take between 5 and 30 minutes, or up to several hours in some very special and unusual cases. That part of the process is highly automated except when you need to flip the prop or exchange various turntable heads. The end result of the data acquisition process is thousands to tens of thousands of high-resolution pictures of that prop, which get further processed on the GPUs.

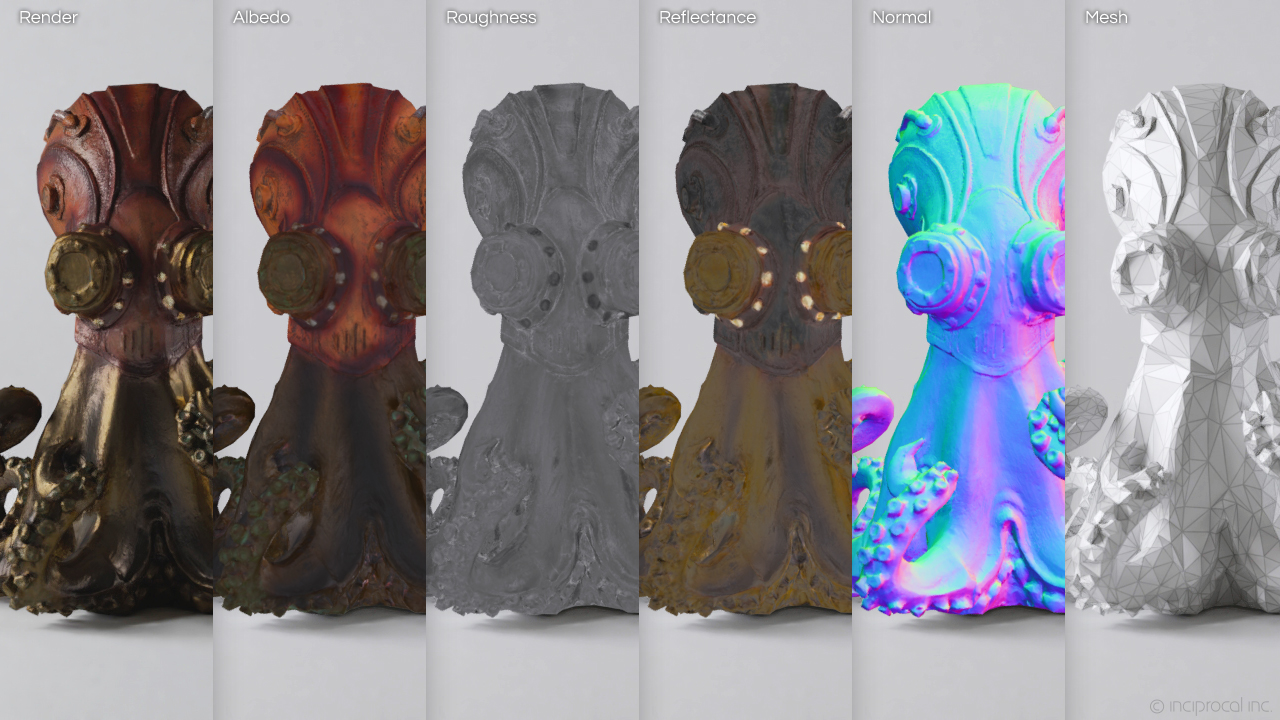

Because various depth and geometry reconstruction techniques are combined and intelligently guided by material property pre-estimation, the resulting point clouds have a lot of advanced metadata associated with them. They then go through a more or less standard pipeline of polygonal mesh reconstruction, decimation, and UV mapping. Because most of the photo-realism comes from capturing and reproducing light and matter interaction, most of the emphasis is put on textural rather than geometric detail. Automatic analysis of source footage results in various parametrizations for a PBR (Physically Based Rendering) shading model.

Targeting the Disney PBR shading model, which is the most commonly used one in the industry nowadays, we produce Diffuse Albedo, GGX Roughness, Colored GGX F0 Reflectance, GGX and/or Diffuse Normals, and many other channels required for more advanced shading models.
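For readers less familiar with these channels, the sketch below shows how a roughness value and a colored F0 typically enter a GGX specular term. It uses the standard GGX (Trowbridge-Reitz) normal distribution and Schlick's Fresnel approximation. The remapping alpha = roughness² follows the common Disney convention and is an assumption here, since how our roughness textures are encoded is not specified above; the function names are hypothetical.

```python
import math


def ggx_ndf(n_dot_h, roughness):
    """GGX (Trowbridge-Reitz) normal distribution term D(h)."""
    # Assumption: alpha = roughness^2, clamped to avoid division by zero.
    alpha = max(roughness * roughness, 1e-4)
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def schlick_fresnel(f0_rgb, v_dot_h):
    """Schlick's Fresnel approximation applied per channel to a colored F0."""
    weight = (1.0 - v_dot_h) ** 5
    return tuple(f0 + (1.0 - f0) * weight for f0 in f0_rgb)
```

A colored F0 matters mostly for metals, where the reflectance at normal incidence is tinted. At grazing angles the Fresnel term tends toward white regardless of F0.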

Digital doubles

What makes the inciprocal scanning tech and process unique is the intrinsic ability to accurately replicate light and matter interactions of the source asset in the form of PBR textures, such that when the digital asset is rendered under the same lighting conditions as in the reference photo, you get the exact same result in virtually rendered form.

Our automatic QA procedure matches thousands of differently lit images from the scanning process against virtually rendered assets to guarantee accurate reconstruction. Additionally, we run each asset through a more artistic validation pipeline, employing lighting techniques mostly found in movies and commercials and relying heavily on softboxes, backdrops, and more.
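The matching step can be pictured as a loop over photo and render pairs with an error metric and a tolerance. The sketch below is only a simplified illustration: the RMSE metric, the 0.02 threshold, and the flat-list image representation are all assumptions, not the actual QA procedure.

```python
import math


def rmse(photo, render):
    """Root-mean-square error between two equally sized images.

    Images are flat lists of linear intensities in [0, 1].
    """
    if len(photo) != len(render) or not photo:
        raise ValueError("images must be non-empty and the same size")
    total = sum((p - r) ** 2 for p, r in zip(photo, render))
    return math.sqrt(total / len(photo))


def validate_asset(pairs, threshold=0.02):
    """Return (index, error) for every photo/render pair above the threshold.

    pairs: list of (photo, render) tuples, one per lighting condition.
    threshold: hypothetical tolerance; an empty result means the asset passes.
    """
    failures = []
    for index, (photo, render) in enumerate(pairs):
        error = rmse(photo, render)
        if error > threshold:
            failures.append((index, error))
    return failures
```

Reporting which lighting conditions fail, rather than a single pass or fail flag, makes it easier to see whether a mismatch comes from, say, specular highlights or from the diffuse response.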

Whether it’s for a photo-realistic AR/VR application, eCommerce, or VFX production, the ultimate goal of a digital double is to seamlessly replace the real object in a digital or partially digital scene. The following images demonstrate the power of digital doubles. Real props, at the top, are photographed under artistic lighting conditions and compared to path-traced renders of our low-poly scanned assets, which are available on Sketchfab, under similar lighting conditions at the bottom.

- inciprocal Fruit Collection

- Steampunk Octopus Figurine

- Antique Globe on Stand

Pricing and promotion

The main reason behind our project was to develop a state-of-the-art data acquisition pipeline along with related hardware and software technologies. The best way to do it was to work on a set of diverse small-scale projects where both photorealism and high real-time performance were important. However, contract agreements greatly limit the ability to share and promote such work.

When working with commercial customers there are many ways to defer costs not only on the business side but also in terms of the scanning process. For instance, sorting large batches into props of similar size or similar material types allows us to optimize the rig configuration for those specific parameters and lower the price per scan.

Sketchfab is a great tool when it comes to browsing, previewing, and sharing assets that are suitable for a wide range of photo-realistic real-time applications. So we decided to allocate some resources and start making some of the commonly used scans available royalty-free on the Sketchfab Store.

Though the real price of a one-off scan could range in the hundreds of dollars due to fixed setup costs and limited batching, we simply went with “coffee cup” units, allowing for additional promotion channels while making a range of commonly used products, in the form of advanced scans, available to a larger audience for just a fraction of their real cost.

You can always email us your scan requests for things you wish were available in our Sketchfab Store.